The New Normal: How AI Tools Redefined "Exceptional" Developer Productivity (2020-2025)

Edgar Joya

VP Full Stack Development COE, Talavera Solutions

A data-driven analysis for engineering leaders navigating the AI revolution

Redefining "Exceptional" in the AI Era

Here's a question that engineering leaders across the industry have been asking: What does "exceptional" developer productivity even mean anymore?

At Talavera Solutions, developer productivity began shifting in early September 2025 when we adopted Cursor as a team. Initial improvements were incremental—code shipped slightly faster, features completed with fewer roadblocks. But nearly two months later, the effect has become exponential. What started as marginal gains has transformed into a fundamental acceleration in how we build software.

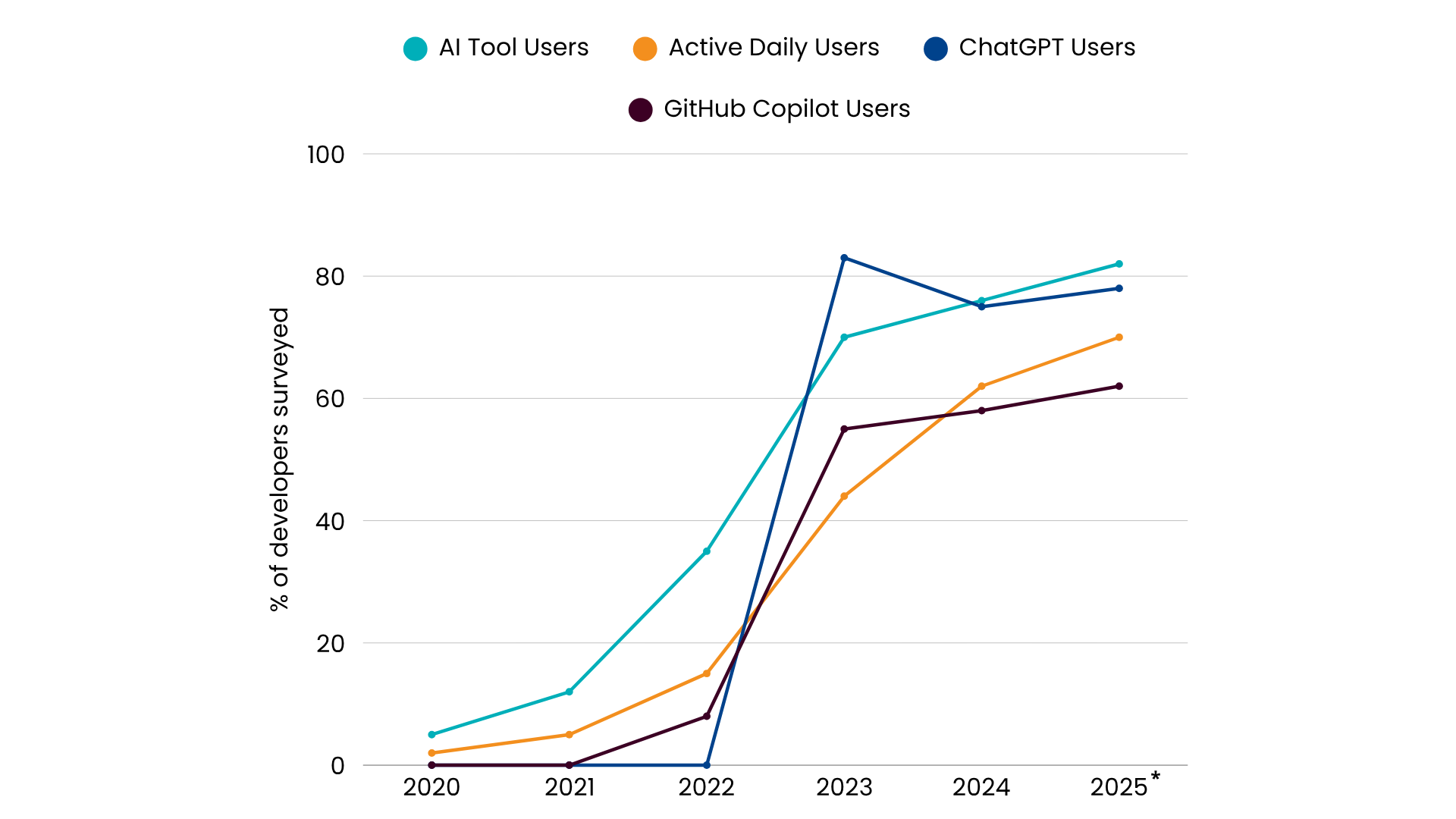

This observation led to a fundamental question: If developers are now 2-3x more productive than in 2020, and similar gains are happening industry-wide, what does "high performance" actually look like now? When conversations with peers (CTOs, VPs of Engineering, technical leaders) kept circling back to this same question, it became clear that the old benchmarks felt obsolete. If 76% of developers are using or planning to use AI tools, has "exceptional" fundamentally changed?

The industry moved so fast from skepticism to ubiquitous adoption that it skipped the measurement phase. Tool vendors had studies showing speed improvements on controlled tasks, but what about the complete picture?

This research reviews credible industry studies, research papers, and surveys from 2020 to 2025 (Stack Overflow, DORA, GitHub, peer-reviewed research from MIT, Harvard, and Georgetown, plus analyses from McKinsey, Gartner, and Forrester) to answer that question.

The findings are more complex than the simple "AI makes everyone faster" narrative.

Stack Overflow's 2024 Developer Survey shows 76% of developers using or planning to use AI tools, with 62% actively using them daily. Individual developers complete simple tasks 26-56% faster. Code volume is up across the board.

But here's the paradox: Google's 2024 DORA report found that despite positive individual impacts, organizational delivery performance declined, by 1.5% in throughput and 7.2% in stability, for every 25% increase in AI adoption. GitClear's analysis of 211 million lines of code shows technical debt accumulating at unprecedented rates. Georgetown research reveals AI-generated code contains 41% more bugs and 322% more security vulnerabilities in some contexts.

The data tells a story that's both optimistic and cautionary. AI has raised the floor—yesterday's elite performance is today's average. But it's also changed what "productivity" means and what differentiates exceptional teams from the pack.

This article interprets what the data shows about developer productivity in the AI era—the opportunities and the tradeoffs.

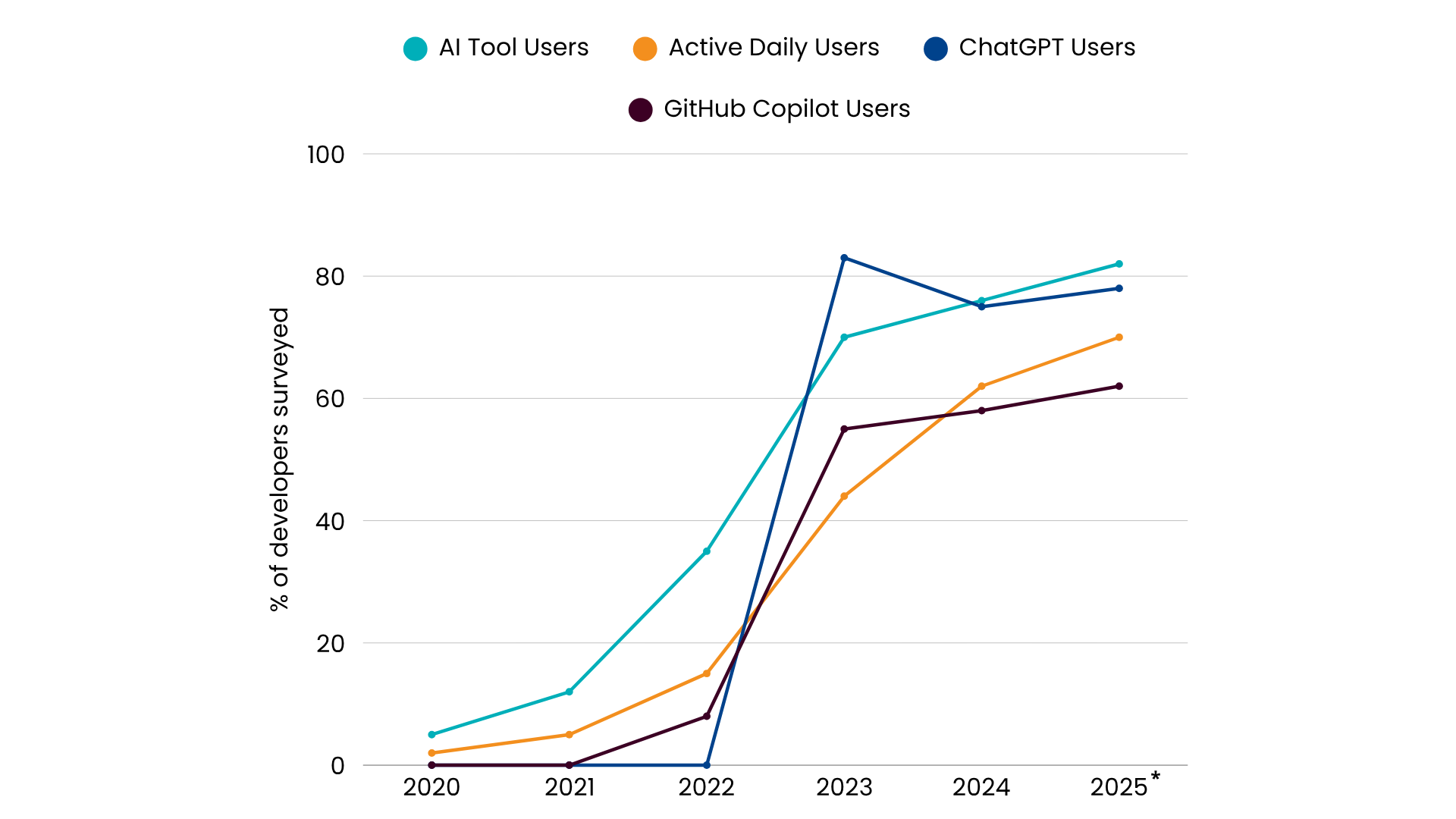

AI Adoption Surge (2020-2025)

The Baseline Years (2020-2021): Pre-AI Productivity Standards

The starting line for most teams was March 2020. The pandemic became an unplanned experiment in remote work.

Microsoft Research found remote work caused collaboration time with cross-group connections to drop by about 25%. Teams became more siloed.

Yet GitHub's Octoverse Report for 2020 showed open source projects using automation saw a 36% increase in pull requests merged and a 33% reduction in merge time. Longer hours, less broad collaboration, but more code shipped.

By 2021, teams adapted to permanent remote and hybrid models. Productivity recovered. The data revealed a new hierarchy of performance.

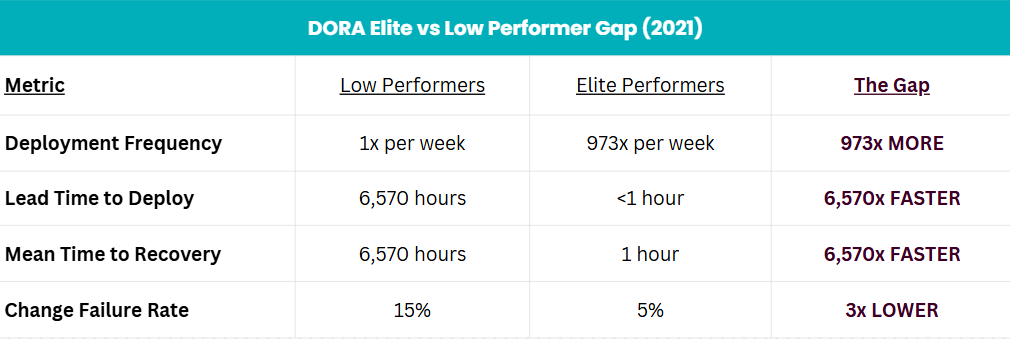

What "High Performance" Looked Like Pre-AI

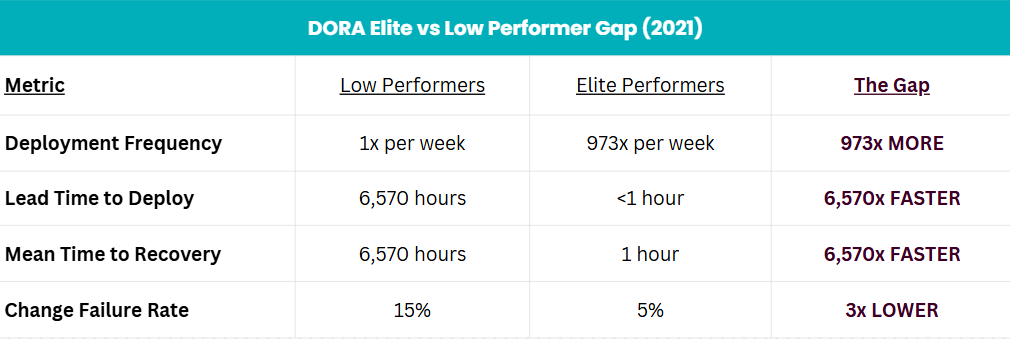

The 2021 DORA State of DevOps Report revealed absurd gaps: elite performers deployed 973x more frequently than low performers. Lead time: 6,570x faster. Change failure rates: 3x lower. Recovery time: 6,570x faster.

These weren't anomalies—they were fundamentally different operating modes.

What created these gaps? Not coding speed. The differentiators were CI/CD maturity, DevOps culture, and "modern operational practices," making teams 1.4 times more likely to report greater software delivery and operational performance.

In 2021, code volume was a reasonable proxy for team effectiveness. Teams shipping more code, more frequently, with fewer bugs were genuinely elite. Quality and velocity moved together.

That assumption was about to break.

DORA Elite vs Low Performer Gap (2021)

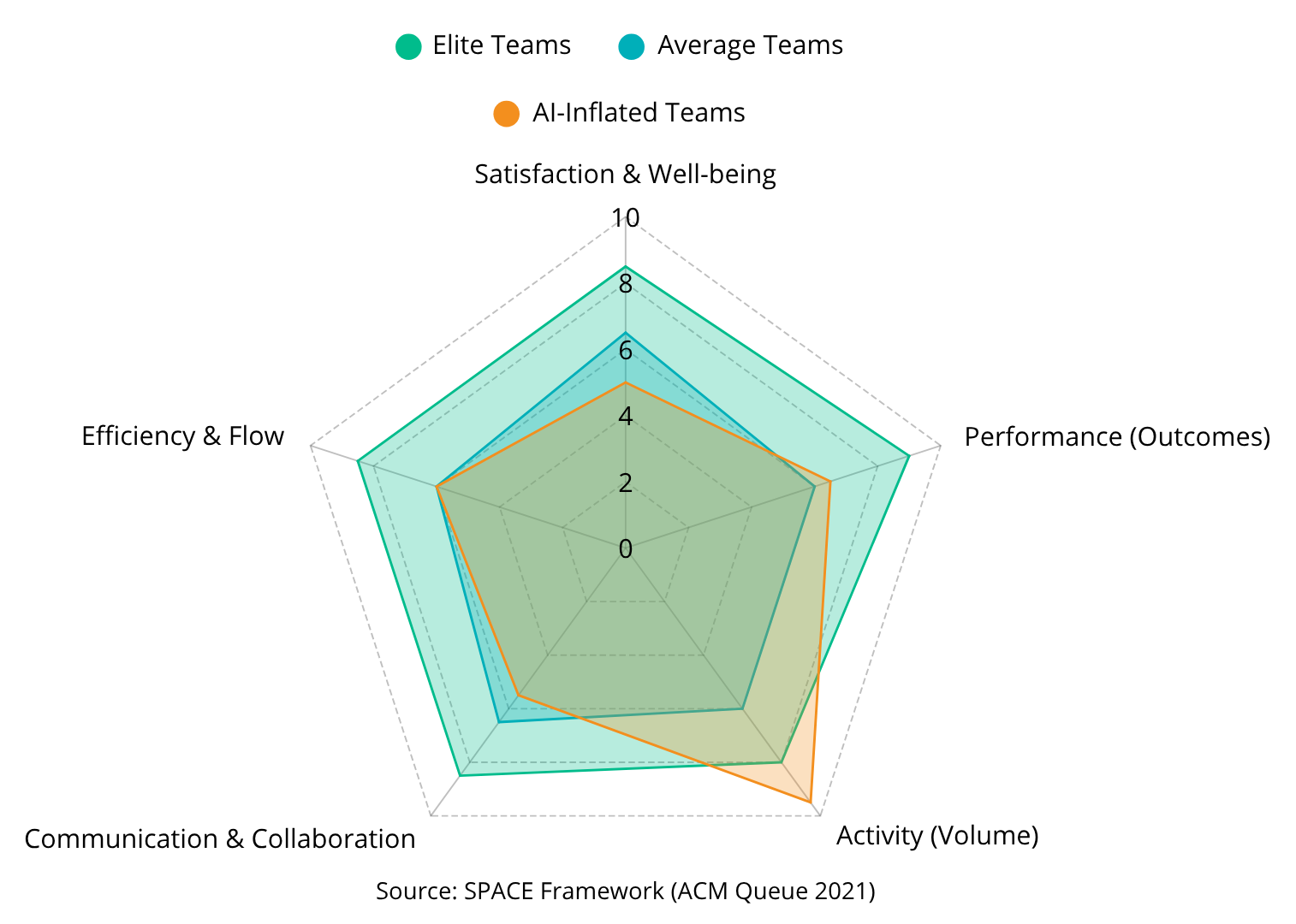

The

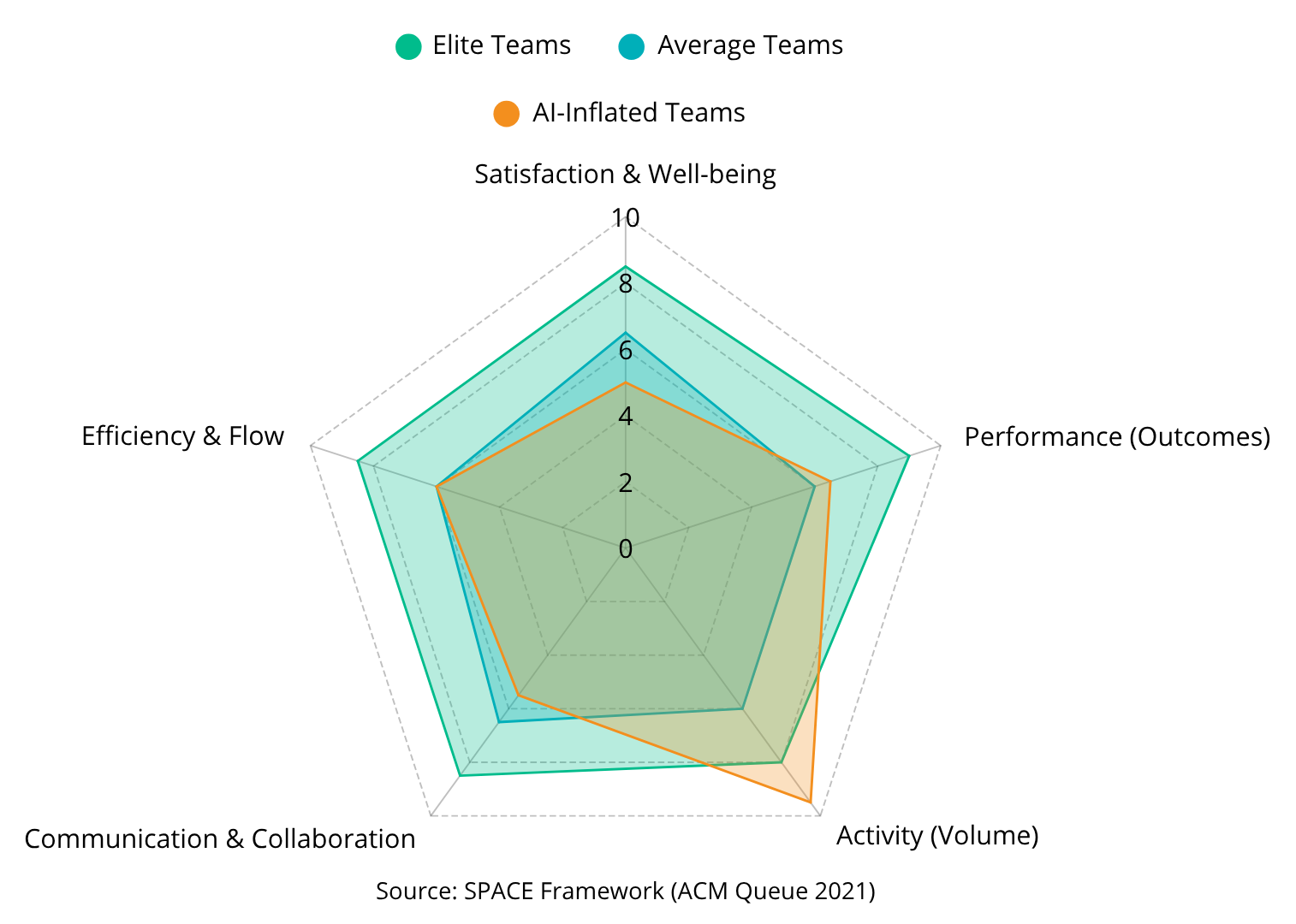

SPACE framework, published in ACM Queue in early 2021, warned that measuring productivity required five dimensions:

Satisfaction and Well-being, Performance, Activity, Communication and Collaboration, and Efficiency and Flow. Their key insight:

"Performance is often best evaluated as outcomes instead of output."The AI Acceleration (2022-2024): When Everything Changed

June 2022: GitHub Copilot went generally available.

Early research suggested 55.8% faster task completion. The gain was real, but it was measured on a simple task (writing an HTTP server) in a familiar domain (JavaScript).

McKinsey's 2023 research found developers could complete simple tasks up to 2x faster, but complex tasks showed less than 10% improvement. Junior developers with under a year of experience were sometimes 7-10% slower on unfamiliar tasks.

Context matters enormously.
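To make the task-mix point concrete, here is a minimal sketch of how the McKinsey figures combine into an overall speedup. It assumes simple tasks run 2x faster and complex tasks about 10% faster (the upper ends of the ranges quoted above), and it takes the share of time spent on simple work as a hypothetical input that the research cited here does not report.

```python
def blended_speedup(simple_share: float,
                    simple_speedup: float = 2.0,
                    complex_speedup: float = 1.10) -> float:
    """Overall speedup when only part of the work gets faster.

    simple_share is the fraction of pre-AI time spent on simple tasks
    (a hypothetical input, not a figure from the studies cited).
    """
    if not 0.0 <= simple_share <= 1.0:
        raise ValueError("simple_share must be between 0 and 1")
    # Time after adopting AI, as a fraction of the original time.
    new_time = simple_share / simple_speedup + (1.0 - simple_share) / complex_speedup
    return 1.0 / new_time


for share in (0.2, 0.5, 0.8):
    print(f"{share:.0%} simple work -> {blended_speedup(share):.2f}x overall")
```

Under these assumptions, a team whose time is dominated by complex work sees an overall gain much closer to 1.1x than to 2x, which is one way to read the gap between headline task-level results and team-level outcomes.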

November 2022: ChatGPT launched. Within twelve months, 83% of developers had used it. Faster adoption than smartphones, cloud computing, or any development tool in history.

By 2023, 70% of developers were using or planning to use AI tools, with 79% wanting to continue using them. But 2023 wasn't just about tools; it was economic pressure meeting technological opportunity. Layoffs meant smaller teams handling expanding scope. "Do more with less" became the mandate.

The Democratization Effect

By 2024, the Accenture enterprise study of GitHub Copilot showed how AI transformed real engineering organizations: 67% of developers used Copilot at least 5 days per week, and 81.4% installed the extension the same day they received a license.

Satisfaction data: 90% felt more fulfilled with their jobs. 95% reported enjoying coding more. 70% reported "quite a bit less mental effort" on repetitive tasks.

Developers accepted about 30% of Copilot's suggestions: the tool generates options, humans make judgment calls. Augmentation, not automation.

But Atlassian's 2024 Developer Experience Report found 65% still experienced burnout despite 61% using AI tools. 77% reported INCREASED workload, 67% spent MORE time debugging AI code, and 62% said AI wasn't significantly improving productivity yet.

The gains are real but not evenly distributed, and they come with new challenges.

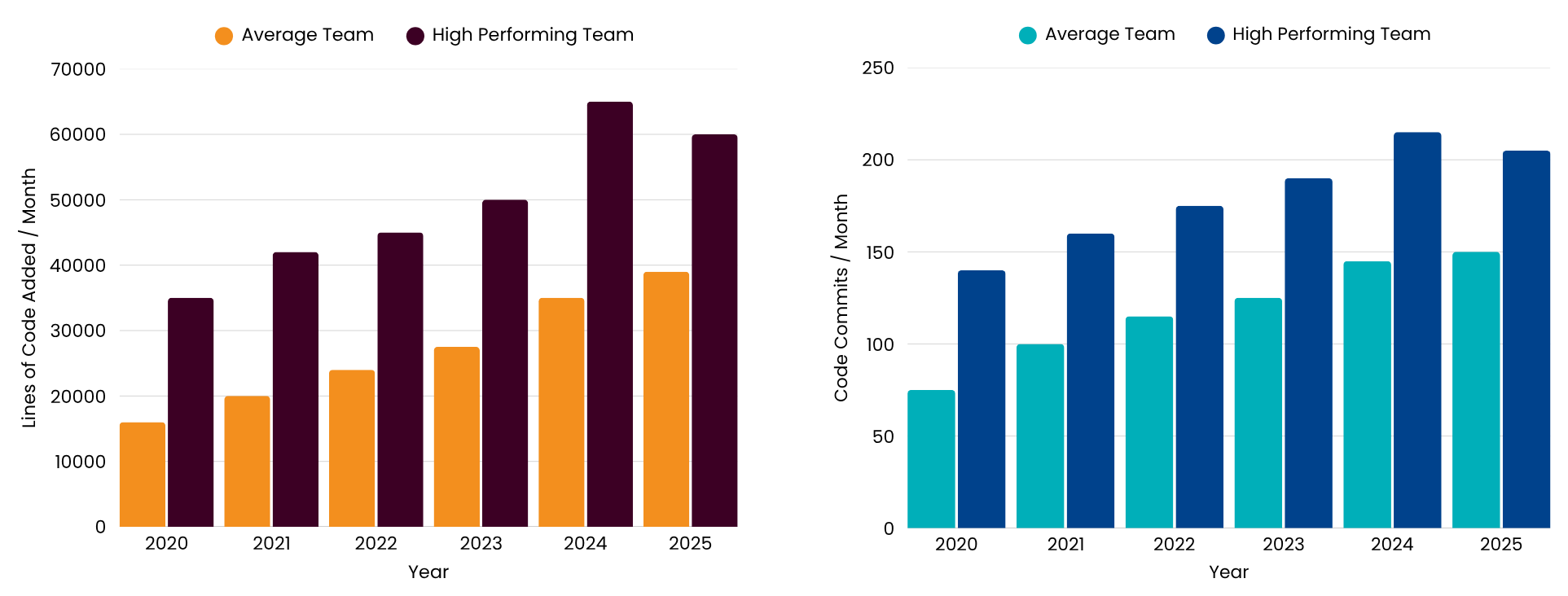

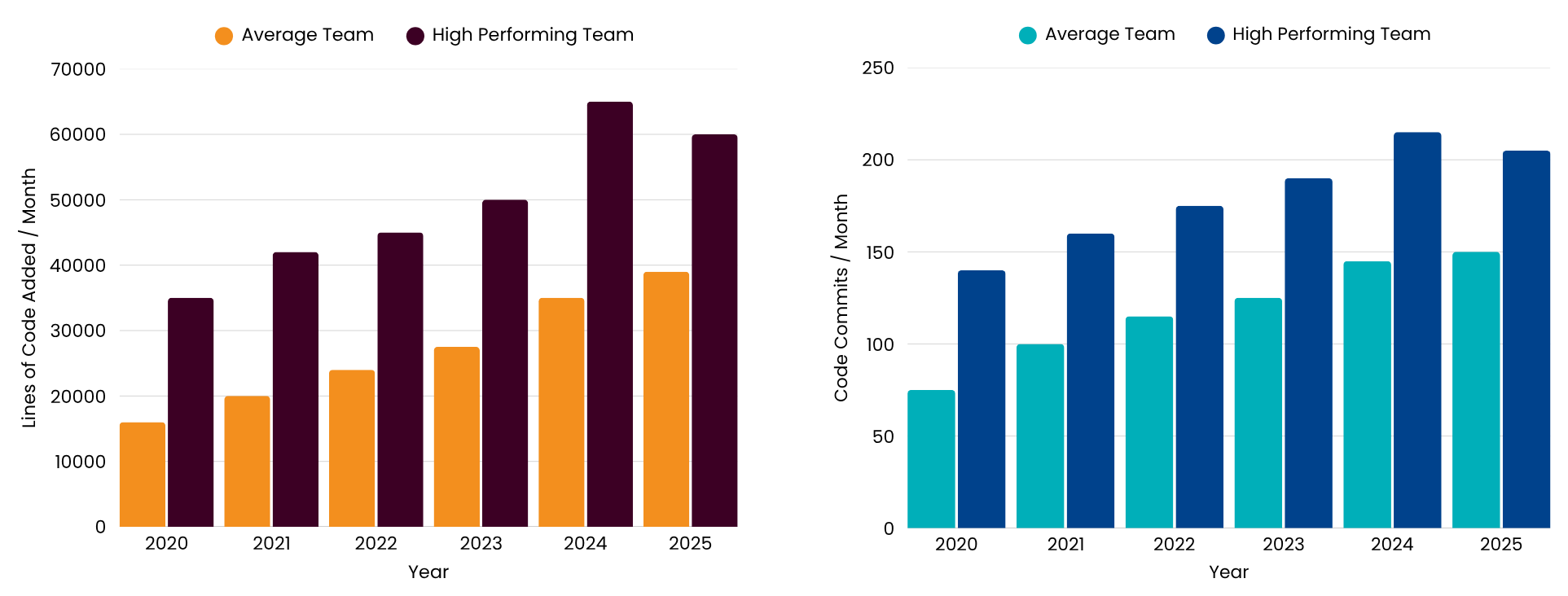

Productivity Benchmark Evolution (4-Person Team)

Remember those 2020 elite performer benchmarks? Average teams in 2024 were matching them. The gap was compressing.

The productivity paradox: when everyone has access to the performance enhancer, it doesn't make you faster than everyone else—it makes "fast" the new normal.

The Percentile Compression Effect

The Quality-Speed Tradeoff

Individual developers are faster. Teams generate more code. But is that code better?

GitClear's analysis of 211 million lines of code from 2020-2024:

1. Code churn expected to double in 2024 vs 2021 (7.9% vs 5.5%)

2. Code duplication increased 8x in 2024

3. Refactoring dropped from 25% (2021) to under 10% (2024)

4. Copy-paste code rose from 8.3% to 12.3%
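The percentages in the list above are easier to compare as relative changes. The short sketch below computes them from the figures as quoted; treating "under 10%" as exactly 10% is an assumption that makes the refactoring drop a lower bound, and the helper name is illustrative.

```python
def relative_change(before: float, after: float) -> float:
    """Change from `before` to `after`, as a fraction of `before`."""
    if before == 0:
        raise ValueError("before must be non-zero")
    return (after - before) / before


# (before, after) pairs in percent, taken from the list above.
figures = {
    "code churn, 2021 -> 2024": (5.5, 7.9),
    "refactoring share, 2021 -> 2024": (25.0, 10.0),  # "under 10%" treated as 10
    "copy-paste share": (8.3, 12.3),
}

for label, (before, after) in figures.items():
    print(f"{label}: {relative_change(before, after):+.0%}")
```

On these numbers, the quoted churn rates correspond to a relative rise of roughly 44%, which is smaller than a doubling, while refactoring falls by at least 60% and copy-paste code rises by close to half.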

API evangelist Kin Lane:

"I have never seen so much technical debt created in such a short period during my 35-year career."

Google's 2024 DORA report found that despite positive individual impacts (7.5% better documentation, 3.4% improved code quality, 3.1% faster code reviews), organizational delivery metrics declined: a 1.5% decrease in throughput and a 7.2% reduction in stability per 25% increase in AI adoption.
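As a rough reading of these per-25% figures, the estimates can be scaled to other changes in adoption. The sketch below assumes the relationship is linear, which is a simplifying assumption and not something the report is quoted here as claiming; the function and constant names are illustrative.

```python
# Figures quoted above: change in delivery metrics per 25% increase in AI adoption.
ADOPTION_STEP_PCT = 25.0
THROUGHPUT_DROP_PCT = 1.5
STABILITY_DROP_PCT = 7.2


def estimated_delivery_change(adoption_increase_pct: float) -> dict[str, float]:
    """Linearly scale the quoted per-step declines (an assumption)."""
    if adoption_increase_pct < 0:
        raise ValueError("adoption_increase_pct must be non-negative")
    steps = adoption_increase_pct / ADOPTION_STEP_PCT
    return {
        "throughput_pct": -THROUGHPUT_DROP_PCT * steps,
        "stability_pct": -STABILITY_DROP_PCT * steps,
    }


print(estimated_delivery_change(50.0))
```

The asymmetry is the useful part: under this reading, stability degrades almost five times faster than throughput, which fits the batch-size explanation that follows.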

Root cause? Increased batch sizes. AI makes large changesets easy. But larger changesets are riskier, harder to review, more likely to introduce bugs. Code generation speed is outpacing human review capacity.

Georgetown University's 2024 cybersecurity research found AI frequently outputs insecure code, and developers using AI believe they've written more secure code than they actually have. AI-generated code contains 41% more bugs and 322% more privilege escalation paths in some contexts.

This isn't an argument against AI tools—it's an argument for using them deliberately. Successful teams treat AI output as a first draft requiring human refinement, not a finished product.

2025 and Beyond: The New Productivity Landscape

The shift in 2025 isn't about raw output.

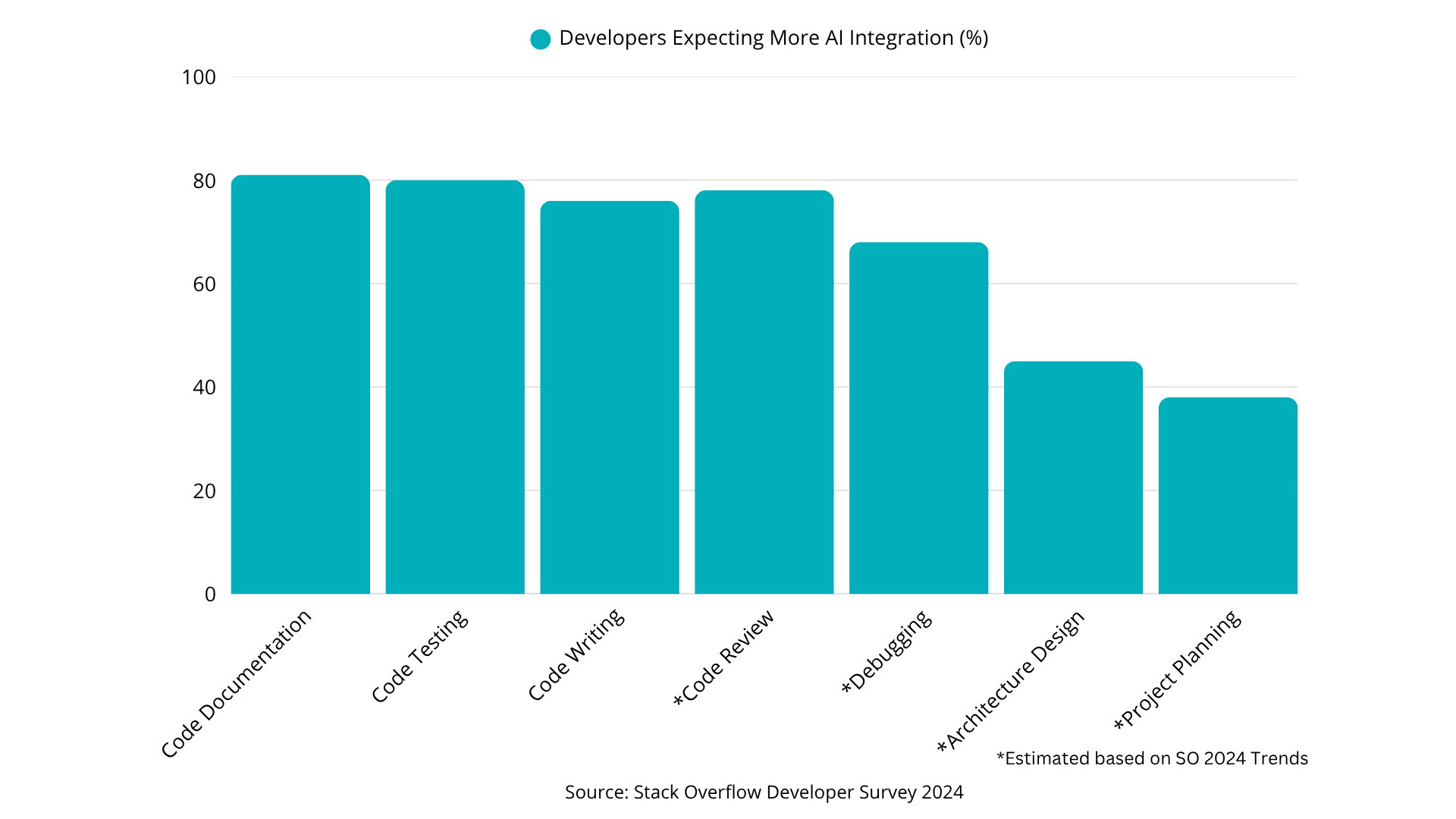

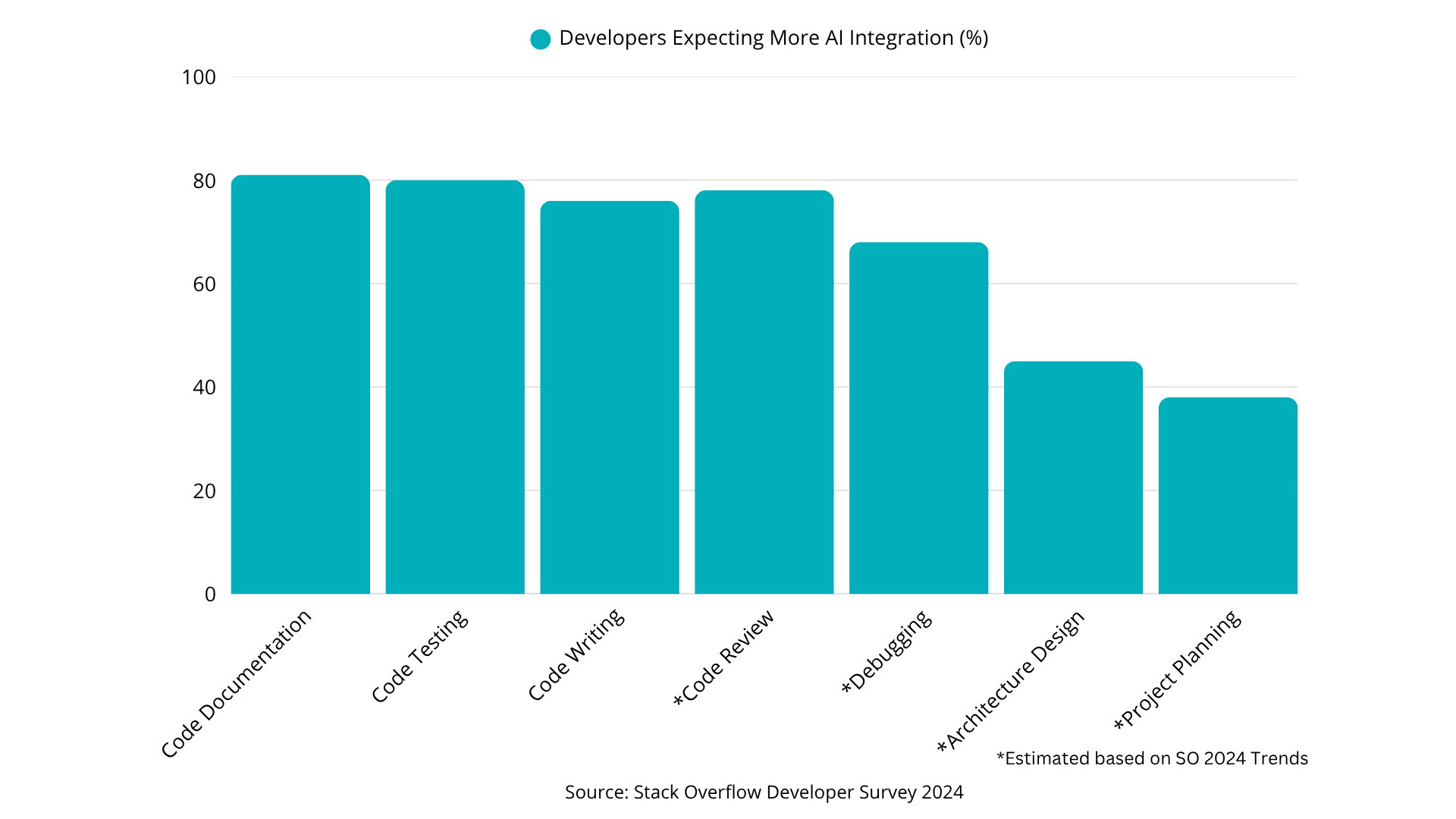

Stack Overflow's 2024 data shows 81% of developers expect AI integration in documentation, 80% in testing, 76% in code writing.

Focus is shifting from creation velocity to quality, documentation, and testing—the practices that matter months later when someone else modifies your code.

70% of professional developers do not perceive AI as a job threat. They recognize AI handles commodity work while human judgment, architectural thinking, and domain expertise become more valuable.

As one developer told ACM's researchers:

"AI should feel like pair programming—sort of like Ironman and Jarvis... Make us more productive so that we can deliver better features faster."

But concerns are real: 79% worry about misinformation in AI results. 65% are concerned about source attribution.

The honeymoon phase is over. The mature integration phase begins: figuring out what AI is actually good for versus what just makes work feel productive.

What Actually Differentiates Elite Teams Now?

If AI gives everyone the ability to ship code faster, what separates exceptional teams?

Not volume alone. AI has made everyone competitive at that game.

1. Quality indicators over quantity metrics

Elite teams in 2025 show healthy deletion rates—35-45% of code added eventually gets removed or refactored. AI makes code generation easy. Humans decide which code survives.

2. Sustainable velocity over hero sprints

AI can help maintain flow state, but flow state differs from chronic overwork. Some teams treat AI productivity gains as an excuse to pile on more scope. Thriving teams use gains to reduce stress, improve work-life balance, and prevent burnout.

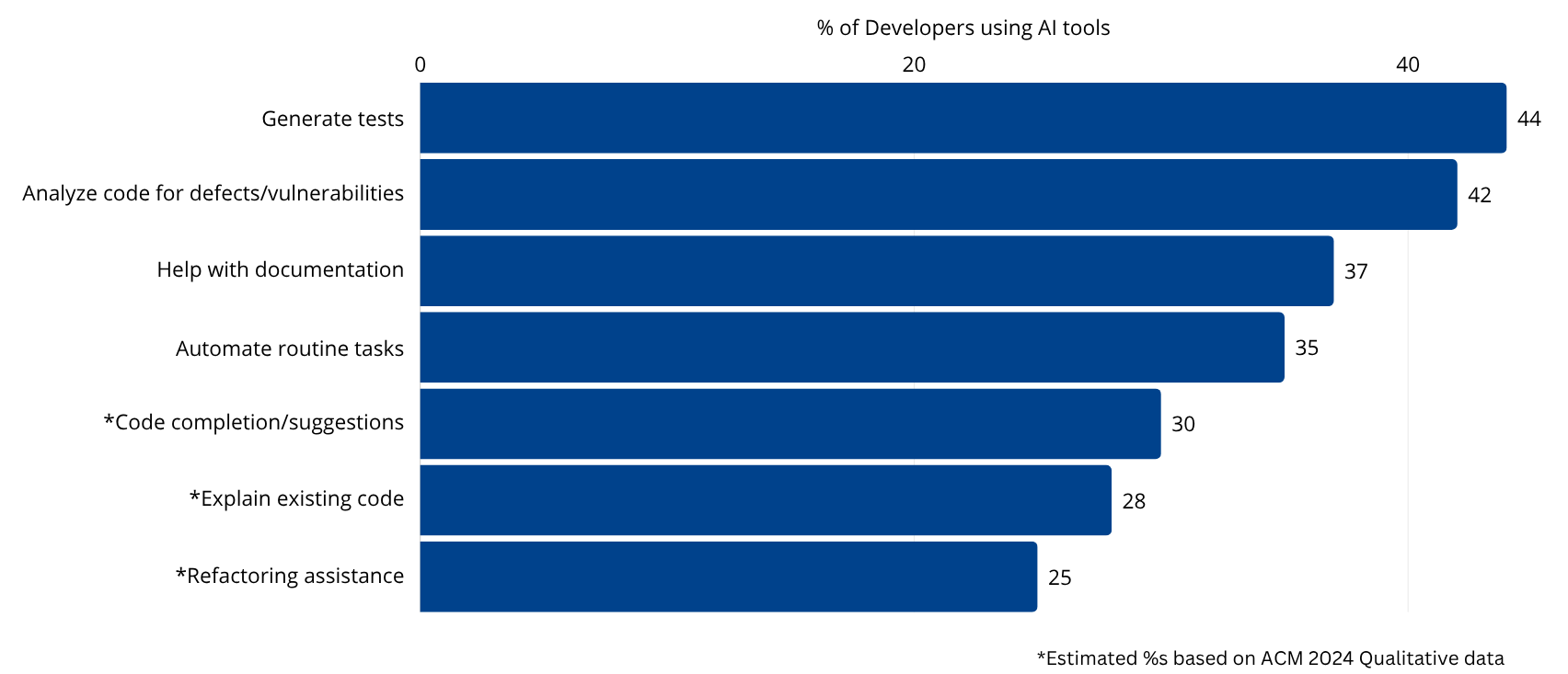

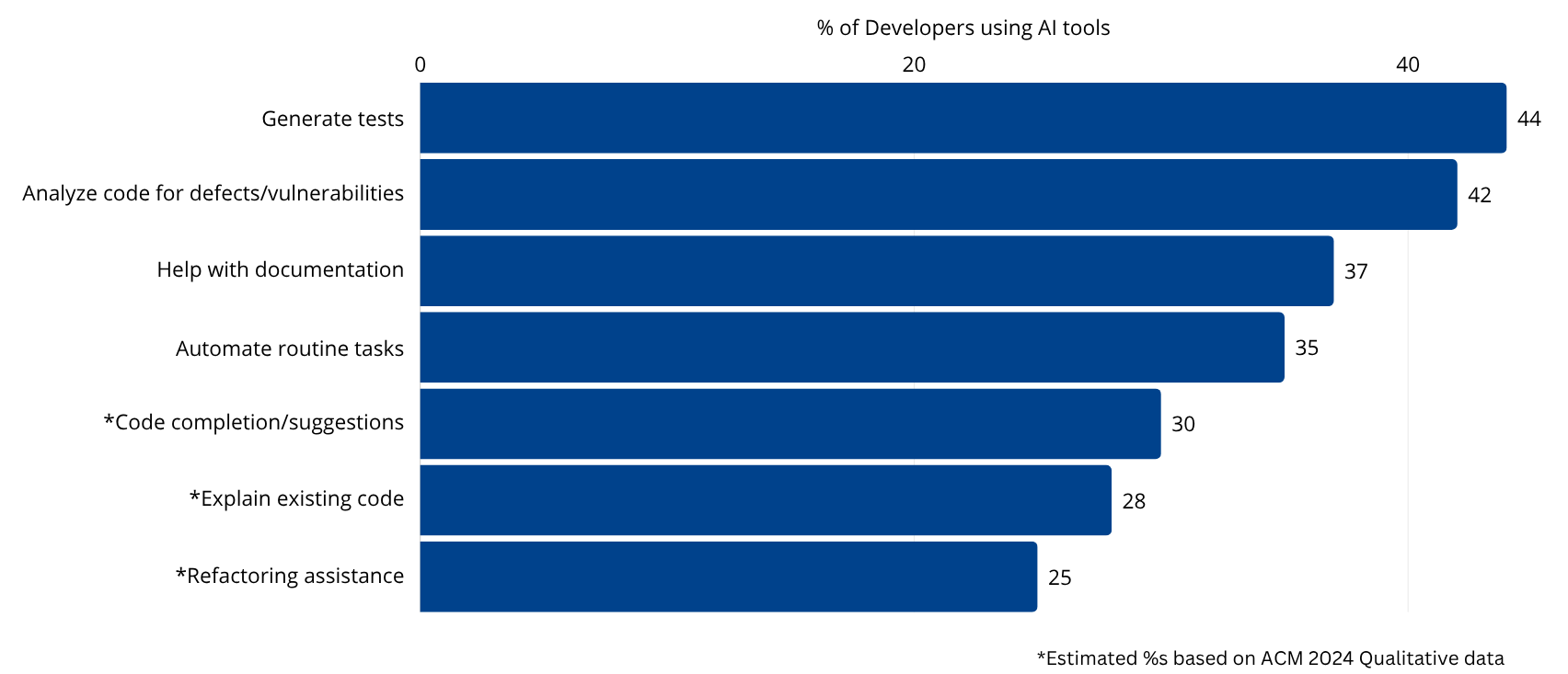

3. Effective AI usage patterns

Developers want AI to:

Elite teams use AI for these high-value tasks. Average teams use it for autocomplete.

Teams are learning which contexts AI helps most. Simple, familiar tasks in greenfield projects? High benefit. Complex problems in mature codebases? Minimal, sometimes negative. A 2025 randomized trial found experienced developers were 19% slower using AI on complex tasks in unfamiliar mature projects.

What Developers Want AI to Do (2024)

4. Systematic AI prompt organization and knowledge sharing

High-performing teams build institutional knowledge around effective AI usage. They capture what works: debugging prompts that identify edge cases, refactoring prompts that preserve intent, test generation prompts that go beyond happy paths.

This knowledge becomes team infrastructure—like code review standards or deployment practices.

5. Platform maturity and reduced friction

DORA research has been consistent: elite performance comes from "both delivery and operational excellence."

AI tools don't fix broken deployment pipelines, flaky tests, or manual configuration. AI can make these problems worse by increasing code volume flowing through strained systems.

Teams pulling ahead invest in platform engineering—self-service infrastructure, automated testing, robust CI/CD. Without platform foundation, AI productivity gains hit a ceiling or accelerate technical debt.

Productivity Metrics That Matter (SPACE Framework)

Recalibrating Expectations: A Guide for Engineering Leaders

If you're benchmarking against 2020-2022 data, you're setting expectations that are obsolete or actively harmful.

The Volume Trap

AI-generated code without refactoring creates technical debt faster than manual approaches ever did. It's easier than ever to ship code—harder than ever to ship good code, because code volume requiring human review and architectural judgment has exploded.

Teams that succeed treat AI output as a starting point for human refinement. Teams that struggle ship AI's first draft and wonder why technical debt is ballooning.

What to Measure Instead

The SPACE framework blueprint: measure across at least three dimensions, include perceptual measures, avoid single-metric traps.
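One way to apply that blueprint is to check a proposed metric set before adopting it. The sketch below is a minimal illustration: the `Metric` type, the short dimension labels, and the wording of the findings are all hypothetical, and only the two rules it enforces (at least three dimensions, at least one perceptual measure) come from the guidance above.

```python
from dataclasses import dataclass

# Short labels for the five SPACE dimensions (labels are illustrative).
SPACE_DIMENSIONS = {"satisfaction", "performance", "activity",
                    "communication", "efficiency"}


@dataclass(frozen=True)
class Metric:
    name: str
    dimension: str
    perceptual: bool  # True for survey-style, self-reported measures


def check_metric_set(metrics: list[Metric]) -> list[str]:
    """Return a list of problems; an empty list means the set passes."""
    problems = []
    unknown = sorted({m.dimension for m in metrics} - SPACE_DIMENSIONS)
    if unknown:
        problems.append(f"unknown dimensions: {', '.join(unknown)}")
    covered = {m.dimension for m in metrics} & SPACE_DIMENSIONS
    if len(covered) < 3:
        problems.append(f"only {len(covered)} dimension(s) covered; need at least 3")
    if not any(m.perceptual for m in metrics):
        problems.append("no perceptual measure included")
    return problems


volume_only = [Metric("commits per developer", "activity", False),
               Metric("lines of code", "activity", False)]
print(check_metric_set(volume_only))
```

A dashboard built only on commits and lines of code fails both checks, which is the single-metric trap in practice.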

Stop over-indexing on:

1. Lines of code written

2. Commits per developer

3. Features shipped per sprint (without quality gates)

Start measuring:

1. Deployment frequency and change failure rate (DORA pairing)

2. Lead time and time to recovery (velocity with resilience)

3. Code review quality and thoroughness (not just speed)

4. Developer satisfaction and flow state (sustainability)

5. Test coverage and defect escape rates (quality signals)

6. Code churn rates and refactoring activity (technical debt indicators)
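The first two items on the "start measuring" list can be computed from a simple log of deployments. The sketch below assumes a hypothetical record shape (`Deployment`) with commit, deploy, and restore timestamps; real pipelines expose this data differently, and the use of medians is a choice made here for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass(frozen=True)
class Deployment:
    committed_at: datetime                  # when the change was committed
    deployed_at: datetime                   # when it reached production
    failed: bool = False                    # did it cause a production failure?
    restored_at: Optional[datetime] = None  # when service was restored, if it failed


def hours(delta: timedelta) -> float:
    return delta.total_seconds() / 3600


def dora_summary(deployments: list[Deployment], period_days: float) -> dict:
    """Summarize the four DORA-style measures for one reporting period."""
    if not deployments or period_days <= 0:
        raise ValueError("need at least one deployment and a positive period")
    failures = [d for d in deployments if d.failed]
    restores = [hours(d.restored_at - d.deployed_at)
                for d in failures if d.restored_at is not None]
    return {
        "deploys_per_day": len(deployments) / period_days,
        "change_failure_rate": len(failures) / len(deployments),
        "median_lead_time_hours": median(
            hours(d.deployed_at - d.committed_at) for d in deployments),
        "median_restore_hours": median(restores) if restores else None,
    }
```

Reporting frequency together with failure rate, and lead time together with restore time, keeps a speed gain from hiding a stability loss.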

Updated Benchmarks for 2025

Typical 4-person team (average):

1. 120-180 commits/month

2. 28,000-50,000 lines added/month

3. 36-44% churn rate

Sustainable high performers (top 25%):

1. 170-240 commits/month

2. 45,000-75,000 lines added/month

3. 38-46% churn rate

Warning signs: Extremely high output with low churn (AI code shipped without refactoring). Inconsistent velocity patterns (burnout cycles). High defect escape rates despite high velocity (quality gaps). Developer satisfaction declining despite productivity gains (unsustainable practices).

Confidence indicators: Healthy deletion rates (active refactoring). Balanced commit sizes. Consistent daily contribution patterns. High developer satisfaction alongside high productivity.
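The benchmark ranges and warning signs above can be turned into a simple screening check. This is a sketch under stated assumptions: the ranges come from the lists above, but the article gives no numeric thresholds for the warning signs, so the cut-offs (`HIGH_OUTPUT_LINES`, `LOW_CHURN`, `HIGH_DEFECT_ESCAPE`) and the `MonthlyStats` fields are hypothetical and should be tuned per team.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MonthlyStats:
    commits: int
    lines_added: int
    churn_rate: float          # as a fraction, e.g. 0.40 for 40%
    defect_escape_rate: float  # fraction of defects found after release
    satisfaction_delta: float  # change in survey score vs. previous period


# Ranges quoted above for a 4-person team.
AVERAGE = {"commits": (120, 180), "lines_added": (28_000, 50_000),
           "churn_rate": (0.36, 0.44)}
TOP_QUARTILE = {"commits": (170, 240), "lines_added": (45_000, 75_000),
                "churn_rate": (0.38, 0.46)}

# Hypothetical cut-offs; the article names the signs but not the numbers.
HIGH_OUTPUT_LINES = TOP_QUARTILE["lines_added"][1]
LOW_CHURN = AVERAGE["churn_rate"][0]
HIGH_DEFECT_ESCAPE = 0.10


def matches(stats: MonthlyStats, band: dict) -> bool:
    """True when every benchmarked field falls inside the band."""
    return all(low <= getattr(stats, key) <= high
               for key, (low, high) in band.items())


def warning_signs(stats: MonthlyStats) -> list[str]:
    signs = []
    if stats.lines_added > HIGH_OUTPUT_LINES and stats.churn_rate < LOW_CHURN:
        signs.append("very high output with low churn")
    if (stats.commits >= TOP_QUARTILE["commits"][0]
            and stats.defect_escape_rate > HIGH_DEFECT_ESCAPE):
        signs.append("high defect escape rate despite high velocity")
    if (stats.satisfaction_delta < 0
            and stats.lines_added >= AVERAGE["lines_added"][0]):
        signs.append("satisfaction declining despite productivity gains")
    return signs
```

A check like this is best used to start a conversation, not to rank teams, since the context caveats that follow apply to every threshold.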

Context matters. Fintech teams on mature platforms differ from consumer app teams in rapid growth. Greenfield differs from legacy modernization.

Developer Perspective: Thriving in an AI-Augmented World

Developer Sentiment on AI Tools (2024)

75% of ChatGPT users want to continue using it. 90% of Copilot users felt more fulfilled. 95% reported enjoying coding more.

These aren't workforce replacement numbers—they're workforce augmentation numbers.

But the full picture: 65% still experience burnout. 77% report increased workload. 67% spend more time debugging AI code.

The Ironman/Jarvis Model

Tony Stark is still Iron Man. Jarvis makes him more effective, handles routine systems management, provides real-time information, flags issues. But Jarvis doesn't make strategic decisions or have creative insights.

The best developers use AI to handle routine work, preserve mental energy for complex tasks, and maintain judgment over what ships.

New Skills, New Value

Career advantage is shifting from "How fast can you code?" to "How good is your judgment?"

Code review of AI output matters more than prompt engineering. Architectural thinking matters more. Evaluating multiple AI-generated approaches and choosing the right one for specific context—that's the differentiator.

MIT/Harvard research across 4,867 developers found junior developers saw 21-40% productivity boosts, while seniors saw only 7-16%. On complex tasks in mature codebases, experienced developers were sometimes slower with AI.

Career dynamic: AI helps most when learning, less when expert. Sustainable careers focus on developing deep expertise, not maximizing AI throughput.

Building Your Personal AI Knowledge Base

Developers are building personal repositories of proven prompts, organized by task type. Not just saving prompts—documenting context requirements, modifications for different scenarios, what to avoid.

Like maintaining dotfiles or snippet libraries, but for AI interactions. The differentiator isn't access to tools—it's mastery of effective communication with them.
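A personal prompt repository of the kind described above can start as a small structured record per prompt. The sketch below is one possible shape, not any particular product's schema; the `PromptEntry` fields and the example entry are hypothetical and simply mirror the items listed: task type, context requirements, scenario-specific modifications, and what to avoid.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class PromptEntry:
    title: str
    task_type: str                    # e.g. "debugging", "refactoring", "testing"
    prompt: str
    context_requirements: list[str] = field(default_factory=list)
    variations: dict[str, str] = field(default_factory=dict)  # scenario -> change
    avoid: list[str] = field(default_factory=list)


def group_by_task(entries: list[PromptEntry]) -> dict[str, list[PromptEntry]]:
    """Index entries by task type so they can be browsed like a snippet library."""
    grouped: dict[str, list[PromptEntry]] = defaultdict(list)
    for entry in entries:
        grouped[entry.task_type].append(entry)
    return dict(grouped)


library = [
    PromptEntry(
        title="Edge-case test generation",
        task_type="testing",
        prompt="List edge cases for this function, then write tests for each.",
        context_requirements=["function source", "existing test style"],
        variations={"legacy code": "ask for characterization tests first"},
        avoid=["accepting tests that only cover the happy path"],
    ),
]
print(sorted(group_by_task(library)))
```

Keeping the entries as plain data means they can live in version control next to dotfiles and be reviewed like any other shared asset.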

The Future of Development Productivity (2026 and Beyond)

AI adoption will continue, but marginal productivity gains will plateau. When 90%+ of developers use AI tools, competitive advantage shifts to how teams use them, not whether they use them.

81% expect more integration in documentation, 80% in testing, 76% in code writing. Notice that expectations decline as the work moves earlier in the development lifecycle. Fewer still expect AI help with architecture design or project planning.

Developers intuitively understand AI's value is in execution and quality assurance, not strategic thinking.

Expected AI Integration by Workflow Stage (2024)

The Next Frontier

The next wave isn't better autocomplete—it's AI-native architectures designed from the ground up assuming AI is part of workflow. Rethinking code structure, documentation approaches, and testing strategies for AI-augmented teams.

The real shift: context-aware assistance understanding specific codebases, team conventions, architectural patterns. Generic autocomplete becomes contextually intelligent collaboration.

What Remains Human

Product intuition: understanding what users actually need versus what they say they want. AI can implement; it can't empathize with user frustration or anticipate unstated needs.

Cross-functional collaboration: working with product, design, sales, support to understand business context. AI can't navigate organizational politics or build trusted relationships.

Architectural judgment: making decisions optimizing for maintainability, scalability, and team velocity over 2-3 years, not 2-3 sprints. AI can suggest patterns; it can't evaluate long-term tradeoffs.

Creative problem-solving: finding novel approaches to genuinely new problems. AI excels at pattern matching existing solutions. It's less good at creative leaps.

The best teams in 2027 will look more like product companies than coding factories. The bottleneck won't be "how fast can code be written"; it'll be "how well do teams understand what to build and why."

Conclusion: Embrace the Shift, Measure What Matters

AI didn't make developers obsolete. It raised the floor and changed the game. Yesterday's exceptional performance is today's baseline. That's not a crisis—it's an opportunity to redefine excellence.

For engineering leaders:

Stop using outdated benchmarks. 2020 data is obsolete. Adjust expectations or risk demoralizing teams or missing actual underperformance.

Stop chasing volume metrics alone. Code per sprint is a vanity metric. DORA data shows individual code volume increasing while organizational delivery performance declines.

Start measuring sustainability and quality. The SPACE framework requires "metrics across at least three dimensions" including perceptual measures. Developer satisfaction, flow state, and sustainable pace matter as much as deployment frequency.

Invest in platform engineering. Without platform foundation, AI just accelerates technical debt.

Treat AI output as first drafts. Winning teams have strong code review, architectural oversight, and security review processes.

For developers:

Value isn't in typing speed anymore. It's in judgment, context, and creative problem-solving.

Quality over quantity. Well-architected 10,000 lines beats hastily generated 50,000 lines. Every time.

Invest in deep expertise. AI handles commodity work. Deep domain knowledge and specialized skills become more valuable.

Know when to use AI and when to trust expertise. AI excels at simple, familiar tasks in greenfield projects. It struggles with complex problems in mature codebases.

The path forward: embrace AI tools as the productivity baseline they've become, but recognize sustainable excellence requires the same things it always has—quality, thoughtfulness, and focus on outcomes over output.

The AI revolution didn't change what makes great engineering teams great. It just made it harder to fake greatness with volume alone.

Want to discuss building multi-dimensional technical talent for your organization? Reach out to me at gabe@talaverasolutions.com to explore how strategic talent development can accelerate your business outcomes.

Peer-Reviewed Research

1. Forsgren, N., Storey, M., et al. (2021). "The SPACE of Developer Productivity." ACM Queue, Vol 19, Issue 1. https://queue.acm.org/detail.cfm?id=3454124

2. Yang, L., et al. (2021). "The effects of remote work on collaboration among information workers." Nature Human Behaviour, 5(9), 1136-1144. https://www.nature.com/articles/s41562-021-01196-4

3. Peng, S., et al. (2023). "The Impact of AI on Developer Productivity: Evidence from GitHub Copilot." arXiv:2302.06590. https://arxiv.org/abs/2302.06590

4. METR Research (2025). "AI Assistance for Experienced Open-Source Developers: A Randomized Controlled Trial." https://metr.org/blog/2025-07-10-early-2025-ai-experienced-os-dev-study/

Industry Reports & Surveys

Article written from verified research and industry data spanning 2020-2025. All statistics cited from peer-reviewed research, industry surveys, or verified corporate studies.

About the Author

Edgar Joya is VP of Full Stack Development COE at Talavera Solutions, where his team has been systematically tracking AI usage patterns and productivity metrics since early 2023. This research—analyzing over 1 billion tokens of AI interactions across development teams—led to insights about what separates high-performing AI-assisted teams from average ones, and ultimately to the creation of PromptShelf.ai, a platform helping development teams organize and share the AI prompts driving their productivity. He believes the next competitive advantage belongs to teams who treat AI collaboration as systematically as they treat code quality.

About Talavera Solutions

Talavera Solutions is a technology company delivering innovative products and services that empower businesses to thrive in the AI era. Specializing in AI-powered development, digital transformation, and strategic technology consulting, Talavera combines deep industry expertise with agile execution to solve complex challenges and accelerate growth for clients worldwide. Learn more at talaverasolutions.com.

About PromptShelf.ai

PromptShelf.ai is an intelligent prompt management platform for engineering teams navigating the AI-augmented development landscape. Built by Talavera Solutions based on research analyzing over 1 billion tokens of AI interactions, it provides infrastructure for organizing, sharing, and refining the AI prompts that drive team productivity, with version control, collaborative libraries, and performance analytics. Whether you're building personal prompt repositories or establishing team-wide AI usage standards, PromptShelf makes AI effectiveness systematic instead of scattered. Start free at app.promptshelf.ai.
